“Spatiotemporal scene interpretation of space videos via deep neural network and tracklet analysis” by Lichao Mou and Xiaoxiang Zhu

This paper won first prize in the 2016 IEEE GRSS Data Fusion Contest: http://www.grss-ieee.org/community/technical-committees/data-fusion/2016-ieee-grss-data-fusion-contest-results/

Spaceborne remote sensing videos are becoming indispensable resources, opening up opportunities for new remote sensing applications. To exploit this new type of data, we need sophisticated algorithms for semantic scene interpretation. The main difficulties are:

- Due to the relatively poor spatial resolution of video acquired from space, moving objects such as cars are very difficult to detect, let alone track;

- Camera motion hampers scene interpretation.

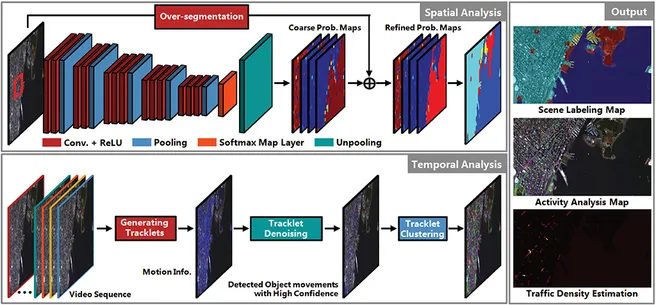

To address these challenges, we propose a novel framework that fuses multispectral images and space videos for spatiotemporal analysis. Given a multispectral image and a spaceborne video as input, a deep neural network fuses the two to produce a fine-resolution spatial scene labeling map. In addition, we propose an approach that analyzes activities and estimates traffic density from more than 150,000 tracklets produced by a Kanade-Lucas-Tomasi (KLT) keypoint tracker. The framework is validated on the data provided for the 2016 IEEE GRSS Data Fusion Contest, including a video acquired from the International Space Station and a DEIMOS-2 multispectral image. Both visual and quantitative analyses of the experimental results demonstrate the effectiveness of our approach.
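The description above does not say how the network combines its two inputs. As one illustration, here is a minimal sketch of the simplest option, early fusion by channel stacking. It assumes the multispectral bands and the video frame are already co-registered and differ in resolution by an integer factor. This is not the architecture from the paper: `upsample_nearest` and `stack_inputs` are hypothetical helpers, and the network that would consume the stacked per-pixel vectors is not shown.

```python
def upsample_nearest(band, factor):
    """Nearest-neighbour upsampling of a 2-D list by an integer factor."""
    return [
        [value for value in row for _ in range(factor)]  # repeat each column
        for row in band
        for _ in range(factor)                            # repeat each row
    ]


def stack_inputs(ms_bands, frame_channels, factor):
    """Stack upsampled multispectral bands and video-frame channels per pixel.

    Assumes (hypothetically) that the inputs are co-registered and that the
    video grid is exactly `factor` times finer than the multispectral grid.
    """
    upsampled = [upsample_nearest(band, factor) for band in ms_bands]
    layers = upsampled + list(frame_channels)
    h, w = len(layers[0]), len(layers[0][0])
    if any(len(layer) != h or len(layer[0]) != w for layer in layers):
        raise ValueError("bands are not co-registered on the same grid")
    # One feature vector per pixel: [ms_band_1, ..., frame_channel_1, ...]
    return [[[layer[r][c] for layer in layers] for c in range(w)] for r in range(h)]


# One 1x2 multispectral band, one 2x4 video channel, scale factor 2.
pixels = stack_inputs([[[1, 2]]], [[[9, 9, 9, 9], [9, 9, 9, 9]]], factor=2)
print(pixels[0][0], pixels[1][3])  # [1, 9] [2, 9]
```

Channel stacking is only the most basic fusion strategy; the network described in the paper may combine the two inputs in a different way.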

Fig. 1: Overview of our proposed pipeline.
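The tracklet analysis described above can be illustrated with a deliberately simplified sketch. It is not the authors' method. It assumes that each tracklet is a list of per-frame (x, y) positions of one KLT keypoint. It treats the median tracklet velocity as the apparent motion induced by the moving camera, which is reasonable only when most keypoints lie on static background. Tracklets whose residual speed exceeds a hypothetical `min_speed` threshold are kept as moving objects, and the number of moving tracklets per grid cell serves as a crude traffic-density proxy. All function names and parameters are hypothetical.

```python
from collections import Counter
from statistics import median

# Hypothetical representation: per-frame (x, y) pixel positions of one keypoint.
Tracklet = list[tuple[float, float]]


def mean_velocity(tracklet: Tracklet) -> tuple[float, float]:
    """Average per-frame displacement between the first and last positions."""
    (x0, y0), (x1, y1) = tracklet[0], tracklet[-1]
    steps = len(tracklet) - 1  # requires at least two positions
    return (x1 - x0) / steps, (y1 - y0) / steps


def moving_tracklets(tracklets: list[Tracklet], min_speed: float = 0.5) -> list[Tracklet]:
    """Keep tracklets that still move after removing global camera motion.

    Assumes most keypoints lie on static background, so the median velocity
    approximates the apparent motion caused by the camera.
    """
    velocities = [mean_velocity(t) for t in tracklets]
    cam_vx = median(v[0] for v in velocities)
    cam_vy = median(v[1] for v in velocities)
    moving = []
    for t, (vx, vy) in zip(tracklets, velocities):
        residual_speed = ((vx - cam_vx) ** 2 + (vy - cam_vy) ** 2) ** 0.5
        if residual_speed >= min_speed:
            moving.append(t)
    return moving


def density_grid(tracklets: list[Tracklet], cell_size: float = 50) -> Counter:
    """Count tracklets per grid cell, keyed by the cell of their start point."""
    counts = Counter()
    for t in tracklets:
        x, y = t[0]
        counts[(int(x // cell_size), int(y // cell_size))] += 1
    return counts


# Five background keypoints drifting with the camera (+1 px/frame in x)
# and one car moving an extra 3 px/frame in y.
tracks = [[(10 * i, 10), (10 * i + 1, 10), (10 * i + 2, 10)] for i in range(5)]
tracks.append([(120, 40), (121, 43), (122, 46)])
cars = moving_tracklets(tracks)
print(len(cars), dict(density_grid(cars)))  # 1 {(2, 0): 1}
```

Counting tracklets per cell ignores road geometry, and a single car can yield several keypoints, so a practical density estimate would need to account for both.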